Agile Is Not A Silver Bullet, Part 2

In my first post, I wrote about the responsibilities, goals, and struggles that development teams face today. In this post, I cover our experiences and why we must take a bigger-picture look at how we work in a world of constantly changing requirements.

Our Experience

I painted a lot of doom and gloom in my last post. As you might expect, I am not suggesting we just throw up our hands and say “Well, that’s just the reality of software development! Deal with it.”

When we launched Nebraska Global and Don’t Panic Labs in 2010, we were already using many agile project management techniques. However, we still could not “maintain a constant pace indefinitely” (one of the principles behind the Agile Manifesto), and we were committed to doing something about it. Before I get into our outcomes, let me give you some perspective on what we have done since 2010:

- We have worked on dozens of greenfield software products, including our own Beehive Industries, EliteForm, and Ocuvera products.

- The team that built these products has been relatively small. We have 33 software engineers across Nebraska Global, 17 of them at Don’t Panic Labs, up steadily from the 12 engineers we started with in the spring of 2010.

- We have a decent balance of youth and experience, including numerous engineers with 15+ years of experience, much of it in software product development.

- From day one, the experienced leaders of our development team have been strongly devoted to proven principles and patterns, or at least passionate about adopting and applying them.

The net result is that the last seven years have been an amazing crucible for rapid learning and iteration on how we approach software design and development.

So what has been the result of this learning and iteration? At the risk of sounding hyperbolic, we have been solving the most complex problems most of us have ever worked on and achieving the best quality we’ve ever seen, all while maintaining the “constant pace” that enables sustainable business agility.

Specifically, we have:

- Implemented a consistent methodology for software architecture and design that embraces change

- Proven that sustainable development velocity is achievable: some of our systems under development have code bases that are over seven years old

- Maintained continuous attention to technical excellence and good design through processes and mentoring, which has enabled consistent adoption of our software design principles and patterns across all projects

Here Come The Zealots!

I can almost hear the questions and objections to the idea that we need to invest more in software design and architecture to be truly agile:

“We can’t afford to do this now; we need to get the MVP out!”

“No one does design upfront. It’s not agile!”

“We can’t begin to architect a solution when the only certainty is that functionality will change.”

“If this gets traction, we will come back later and re-architect or refactor it correctly.”

The last one is particularly fascinating to me. Think about what it is saying: if our hacked-together MVP (minimum viable product) starts taking off, we will stop what we are doing and redesign it correctly. This is a trap, plain and simple. In my experience, the only scenario where you will have time to redesign an MVP is if you are NOT getting traction. Every project I’ve worked on that customers cared about was driven to add more and more features. You just aren’t going to get many second chances at a system design if your product has initial success.

Enter The Design Stamina Hypothesis

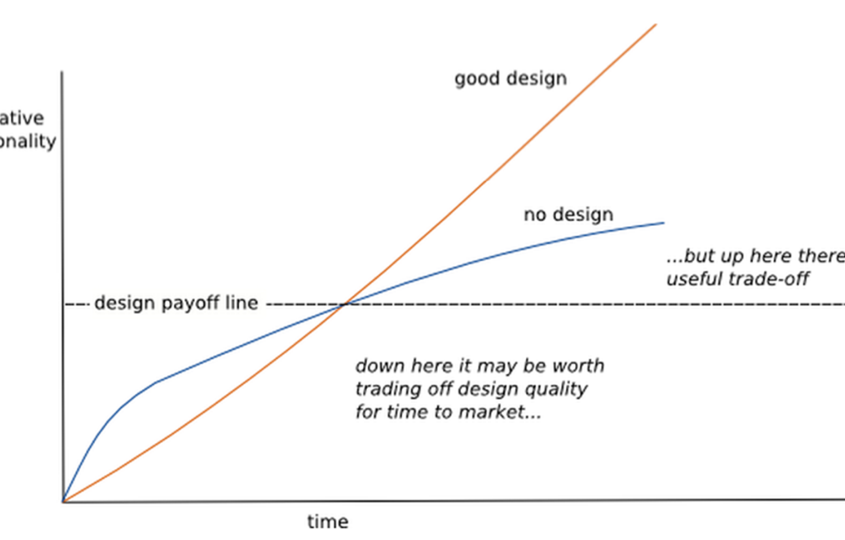

Martin Fowler wrote a blog post a few years back that really captured, both in words and visually, the argument against the “code and fix” mentality of a lot of development teams. He makes the argument that while there is an initial advantage, in terms of throughput, for a “code and fix” team over a team that is doing “good design”, there will be a crossover point where the “code and fix” team starts to lose velocity and the “good design” team continues with a “constant pace”.

The image below is taken from Fowler’s post:

He goes on to argue that that crossover point is usually weeks into a project, not months. My own personal experience confirms the legitimacy of this phenomena and I suspect if teams were honest with themselves they would also recognize this in their own experiences.

Designing For Change

One argument I have heard is that it is unrealistic to design a system when so much is unknown: requirements will change, and the insights we gain from customers may drive the product in directions different from what we understand today.

My answer to this is pretty simple: this is only a problem if you are not designing for change.

This notion of encapsulating future change has been around for quite some time and is common in non-software system design. It requires isolating areas of likely change from the rest of the system so that when a requirement does change, its impact is limited to a single module, service, or class. The advantage of this approach is that you don’t need to know the specifics of future changes; you just need to recognize that change is likely.
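To make this concrete, here is a minimal Python sketch, assuming a hypothetical checkout system in which the shipping-cost rule is the area of likely change. The names `Order`, `ShippingPolicy`, `FlatRateShipping`, `FreeOverThresholdShipping`, and `CheckoutService` are illustrative only and are not taken from any of our products. The checkout code depends only on the policy interface, so when the rule changes, the change lands in a new policy class instead of rippling through the system. Amounts are integer cents to avoid floating-point rounding.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    subtotal_cents: int


class ShippingPolicy(ABC):
    """Hides a volatile business rule: how shipping is priced (hypothetical)."""

    @abstractmethod
    def cost_cents(self, order: Order) -> int: ...


class FlatRateShipping(ShippingPolicy):
    def __init__(self, rate_cents: int) -> None:
        self.rate_cents = rate_cents

    def cost_cents(self, order: Order) -> int:
        return self.rate_cents


class FreeOverThresholdShipping(ShippingPolicy):
    """A later requirement change, added without touching CheckoutService."""

    def __init__(self, threshold_cents: int, fallback: ShippingPolicy) -> None:
        self.threshold_cents = threshold_cents
        self.fallback = fallback

    def cost_cents(self, order: Order) -> int:
        if order.subtotal_cents >= self.threshold_cents:
            return 0
        return self.fallback.cost_cents(order)


class CheckoutService:
    """Knows *that* shipping is priced, not *how* it is priced."""

    def __init__(self, shipping: ShippingPolicy) -> None:
        self.shipping = shipping

    def total_cents(self, order: Order) -> int:
        return order.subtotal_cents + self.shipping.cost_cents(order)


if __name__ == "__main__":
    order = Order(subtotal_cents=12000)
    flat = FlatRateShipping(rate_cents=999)
    print(CheckoutService(flat).total_cents(order))  # 12999
    print(CheckoutService(FreeOverThresholdShipping(10000, flat)).total_cents(order))  # 12000
```

Nobody had to predict the free-shipping rule in advance; it was enough to recognize that shipping pricing was likely to change and give it its own home.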

Here are some examples of areas of likely change or volatility:

- Data storage and access

- Workflow/sequence

- External service and hardware dependencies

- Business rules and algorithms

- Difficult design and construction areas

The driving principles behind this methodology derive from David Parnas’ seminal 1972 paper, “On the Criteria To Be Used in Decomposing Systems into Modules,” which introduced the concept of information hiding and promoted, among other things, decomposing systems by hiding “arbitrary” design decisions behind static interfaces.
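Parnas illustrated the idea with a KWIC index system, in which one module hides how lines of text are stored. The Python sketch below is loosely modeled on that line-storage idea; it is an illustration under our own assumptions, not a reproduction of the paper, and `LineStore`, `ListLineStore`, `PackedLineStore`, and `first_words` are hypothetical names. The storage layout is the “arbitrary” decision being hidden: both classes satisfy the same interface, so client code does not change when the representation does.

```python
from typing import Protocol


class LineStore(Protocol):
    """Static interface: callers see lines and words, never the storage layout."""

    def add_line(self, text: str) -> None: ...
    def word(self, line: int, index: int) -> str: ...
    def word_count(self, line: int) -> int: ...
    def line_count(self) -> int: ...


class ListLineStore:
    """Representation A: each line kept as its own list of words."""

    def __init__(self) -> None:
        self._lines: list[list[str]] = []

    def add_line(self, text: str) -> None:
        self._lines.append(text.split())

    def word(self, line: int, index: int) -> str:
        return self._lines[line][index]

    def word_count(self, line: int) -> int:
        return len(self._lines[line])

    def line_count(self) -> int:
        return len(self._lines)


class PackedLineStore:
    """Representation B: one flat word list plus the start offset of each line."""

    def __init__(self) -> None:
        self._words: list[str] = []
        self._starts: list[int] = []

    def add_line(self, text: str) -> None:
        self._starts.append(len(self._words))
        self._words.extend(text.split())

    def _end(self, line: int) -> int:
        # A line ends where the next one starts (or at the end of the word list).
        if line + 1 < len(self._starts):
            return self._starts[line + 1]
        return len(self._words)

    def word(self, line: int, index: int) -> str:
        if not 0 <= index < self.word_count(line):
            raise IndexError(index)
        return self._words[self._starts[line] + index]

    def word_count(self, line: int) -> int:
        return self._end(line) - self._starts[line]

    def line_count(self) -> int:
        return len(self._starts)


def first_words(store: LineStore) -> list[str]:
    """Client code written only against the interface."""
    return [store.word(i, 0) for i in range(store.line_count()) if store.word_count(i) > 0]


if __name__ == "__main__":
    for store in (ListLineStore(), PackedLineStore()):
        store.add_line("information hiding")
        store.add_line("")
        store.add_line("design for change")
        print(first_words(store))  # ['information', 'design']
```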

The end result is a system that embraces change rather than one that is rigid and fragile in the face of it. There are other benefits as well:

- Reduces the coupling and increases the cohesion of modules, services, and classes

- Creates systems that are highly testable

- Tends to naturally incorporate widely accepted design principles like SOLID

- Narrows a developer’s “field of view” when she is making changes to the system, reducing the likelihood of unintended changes in behavior

- Results in a system that is easier for developers to comprehend and follow
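The testability benefit follows directly from hiding an external dependency behind an interface. In this minimal Python sketch, `SmsGateway`, `ShipmentNotifier`, and `FakeSmsGateway` are hypothetical names; a real gateway would wrap whatever messaging provider a team actually uses. Because `ShipmentNotifier` knows only the interface, a test can substitute an in-memory fake and verify behavior without any network access.

```python
from typing import Protocol


class SmsGateway(Protocol):
    """Hypothetical interface hiding an external messaging service."""

    def send(self, phone: str, message: str) -> None: ...


class ShipmentNotifier:
    """Business logic that depends only on the SmsGateway interface."""

    def __init__(self, gateway: SmsGateway) -> None:
        self._gateway = gateway

    def notify_shipped(self, phone: str, order_id: str) -> None:
        if not phone:
            raise ValueError("phone number is required")
        self._gateway.send(phone, f"Order {order_id} has shipped.")


class FakeSmsGateway:
    """Test double that records messages instead of calling a real service."""

    def __init__(self) -> None:
        self.sent: list[tuple[str, str]] = []

    def send(self, phone: str, message: str) -> None:
        self.sent.append((phone, message))


def test_notify_shipped_sends_one_message() -> None:
    fake = FakeSmsGateway()
    ShipmentNotifier(fake).notify_shipped("555-0100", "A-42")
    assert fake.sent == [("555-0100", "Order A-42 has shipped.")]


if __name__ == "__main__":
    test_notify_shipped_sends_one_message()
    print("ok")
```

Under pytest, the `test_` function would be collected automatically; the `__main__` guard simply lets the file run on its own.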

Bottom Line

We live in a world of changing requirements, and businesses rely on us to help them function in this world. It is our responsibility to enable business agility.

Software can rot as a result of changing requirements, a lack of coherent design, and developers’ unfamiliarity with the original design philosophy.

The bottom line is this: software design always happens. You are either doing it:

- proactively, by following good software design principles and methods, informed by a conceptual design for the entire system that recognizes the likely areas of change, or

- in real time as you code, making each design decision in isolation without fully understanding its implications.

If you and your team choose to forgo a disciplined approach to development and maintenance, expect software entropy to happen. There is no shortcut or magic framework that can save you. You will not be able to maintain a “constant pace”. Allowing chaos and poor design to rule means your organization will have reduced business agility over time.

You need to ask yourself: do you want your design to be random, or do you want it to be coherent, consistent, and able to embrace change? The answer matters, because the success of your product or business and the health of your development culture depend on it.

This post was originally published on the Don’t Panic Labs blog.