It’s very easy to throw terms around in our (or any) industry. In our hurried culture, we latch onto words or phrases that may not fully encapsulate their original intent. I’m afraid that is what has happened to the label of “software engineer”. And it has not been without consequences.

I believe the real, working definition of what a software engineer truly is has been diluted. Some of this could be that the title has been overused by people who have little knowledge of what makes an engineer an engineer. Part of it, too, could be that it has become a marketing or recruiting phrase (let’s be honest, just stating you have “programmers” or “developers” doesn’t have the same prestige as “software engineer”). As soon as one company begins using “software engineer” to describe their employees, the floodgates are opened for other businesses to begin doing the same. The term quickly becomes meaningless.

Software development is still a relatively young industry and is one that has evolved quickly during its short history. So, it’s not surprising that we have informally adopted varying terminology. But as our industry continues to mature with formal accreditations and recognition of skills, it’s important to be aware of what we’re implying with the words we use and titles we assign (especially when we begin appropriating terminology from existing disciplines for use in our own industry).

What I’d like to cover here is what we at Don’t Panic Labs believe a software engineer is and what we mean when we say it. Hopefully, this will force us to be more deliberate about its definition and highlight the differences that separate developers from software engineers. And even more, we can bring some awareness to what we call ourselves and the potential ramifications of doing so incorrectly.

A Little History

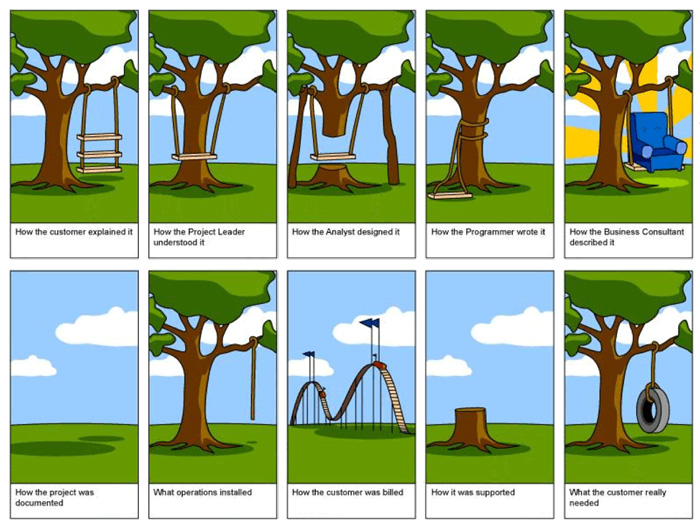

In the short history of software development, the act of writing code has suffered from a lack of awareness around what is truly required to create a functional, understandable, and maintainable codebase. In some ways, this is due to the industry seeing developers as just coders.

Since the beginning, we, as an industry, have had little understanding of what solid software design principles look like in action. We code it, release it, patch it, update it with new features, and then repeat the process. Code and fix. Code and fix.

But that all comes with a cost. In the 1980s, a few people began advancing ideas that software development should be treated as a discipline like any other engineering-based vocation. Sadly, it hasn’t caught on as quickly as it should have.

Regardless of how far we’ve come, we still have an identification problem when we label all developers as “software engineers”. It’s not only unfair to actual engineers, it implies something that is not true.

As we at Don’t Panic Labs see it, you have four basic levels: the developer, the software engineer, the senior software engineer, and the lead software engineer/software architect.

The Software Developer

The definition of a software developer is the widest of the four we’re covering here. At the risk of sounding like I’m reducing the role of developer, what I’m listing here is what I consider the bare minimum of what we’d consider a developer at Don’t Panic Labs.

We see the act of writing code as analogous to the process of manufacturing a particular product. While it requires specialized knowledge and skills, it does not involve any design. Developers are the construction workers, the heavy lifters, the folks who bring the ideas into reality.

A developer is a person who understands the programming languages used, has a grasp of coding best practices, and knows the tech stack of their project’s requirements. However, they are not the people who created the plans and thought through the various scenarios that could arise.

The Software Engineer

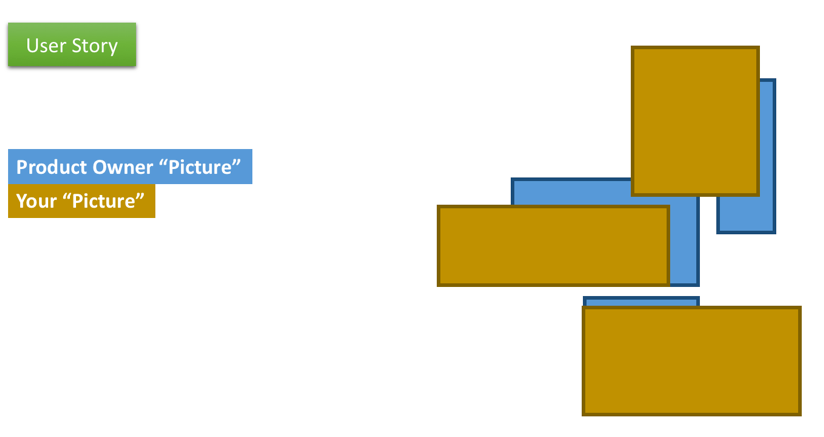

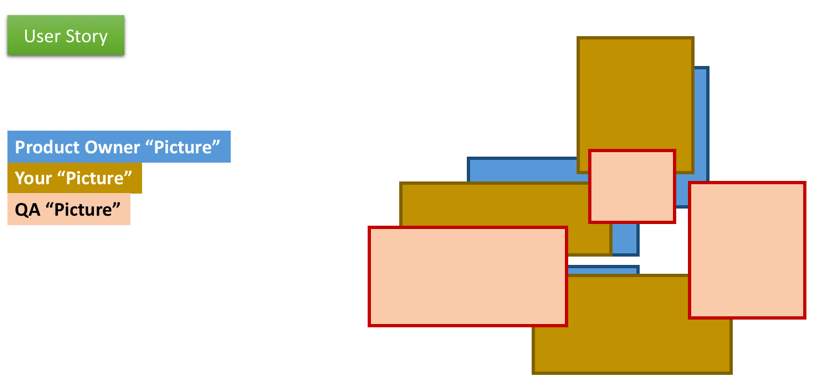

To me, the difference between a developer and a software engineer is analogous to the difference between the person working on the factory floor (the developer) and the manufacturing supervisor. While the former does the actual work, the latter ensures the directions and plans provided for manufacturing are correctly executed and that any ambiguities or adjustments are identified and dealt with. To perform in this role, it is essential to be able to communicate and collaborate as well as possess a working knowledge of design principles and best practices.

In our world of software, the role of software engineer includes all of the software developer’s skills as well as consistently demonstrating great attention to detail. This person also looks for opportunities to use and execute appropriate engineering techniques such as automated tests, code reviews, design analysis, and software design principles.

From a maturity standpoint, the software engineer is also someone who recognizes when their inexperience is a factor and proactively reaches out to more senior engineers for assistance or insight. This is where one’s ability to collaborate and communicate should be evident.

The Senior Software Engineer

A senior software engineer must be all that is listed above, but also be someone who can mentor younger programmers and engineers (e.g., young hires, interns) to improve their skillsets and understand what goes into design/architecture decisions. They also help fill in the gaps left by an education system that focuses on programming skills and not software design knowledge. To do this, they need the ability to articulate and advocate design principles and recognize risks within a design and project.

A senior software engineer is also expected to be able to autonomously take a customer problem all the way from identification to solution and is uncompromising when it comes to quality and ensuring integrity of the system design.

Senior software engineers are your best programmers. But just because a person is a great programmer does not mean they can decompose a system into smaller chunks that can then be turned into a solution. That is the main requirement of a lead software engineer / software architect.

The Lead Software Engineer / Software Architect

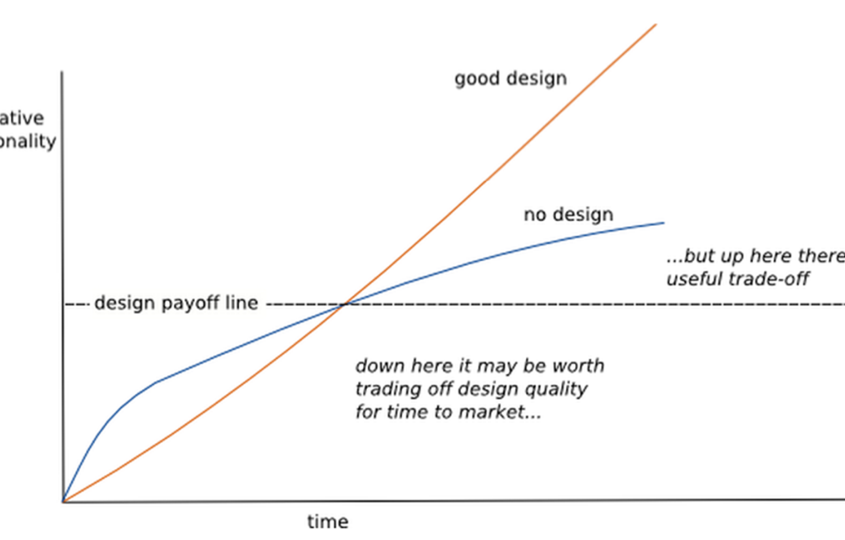

What makes a lead software engineer/software architect is the thinking, the considerations, the design behind the code, the identification of tradeoffs, and all of what must come together before any code is written.

Being a lead software engineer is not so much about “fingers on the keyboard”. Their focus is on the design of the system that will get fingers on the keyboard later. But before any of that happens, the lead software engineer must scrutinize and evaluate all big picture elements. The design that comes from this effort should produce a clear view into all the considerations, anticipate most of the problems that could come up, help ensure that best practices will be adhered to along the way, and, maybe most critically, enable the system to be maintainable and extensible as new and changing requirements appear. In other words, the design must enable sustainable business agility.

Once the system design (or software architecture) is in place, it is the lead engineer who owns the “big picture” and ensures that the development and detailed design decisions that ensue are consistent with the intent and principles behind the original design. Without the constant nurturing and maintenance of overall design integrity, the quality of the system design will rapidly decay. I strongly believe this responsibility should not be shared but rather should be owned by the lead software engineer.

The Future

So now that I’ve laid out how we at Don’t Panic Labs view developers and engineers, there’s something else we believe is also important and must be addressed if we are to move forward as an industry: education.

I often speak about how we aren’t educating our future developers and engineers. I’ve even written a post about how we’re failing. The problems we will be solving in the future (or now, one could argue) will require more than the “construction worker” developers we’re cranking out of our educational institutions today. While we need our developers to construct the code, we need more engineers equipped and educated to effectively think about that code before a single keystroke lands. As the complexity of our systems increases, so too does our need to ensure we’re anticipating the problems we may come up against and building sustainable systems.

This is no different than if, using building construction as an analogy, we were educating only our carpenters, plumbers, electricians, and welders without considering the need for engineers who create the plans for these folks to follow. As technologies and materials improve, engineers must be able to leverage these advances and make the necessary adjustments. Otherwise, workers will be left using the same old methods or, worse, making their own decisions in a vacuum and possibly creating a worse (or dangerous) situation for everyone. Without the high-level vision and insight provided by an engineer, the whole industry is held back.

As our world continues to run at full speed toward a future more reliant on ever-evolving technologies, the need for properly-trained engineers (who are educated based on a standardized set of requirements) will continue to grow.

And with that comes the need for a better understanding of the roles that make up development teams. Whether one’s role is developer, software engineer, senior software engineer, or software architect, the distinctions are as important as the work they do. We already know that following the current path inevitably leads to chaos.

That’s something our world cannot afford.

This post was originally published on the Don’t Panic Labs blog.