The Danger of Incomplete Pictures, Part 3: A Framework for Structured Critical Thinking

Have you ever wrestled with a problem in your mind and then, while trying to explain it to someone else, had an epiphany about how to solve it? This has happened to me on numerous occasions.

Or have you ever jumped in to write code for a piece of business logic that you felt you completely understood, only to find unanticipated aspects of the business case that forced you to get clarification or, worse, start over? Unfortunately, I have also experienced this less desirable scenario.

In my third and final post of my series on The Danger of Incomplete Pictures (Part 1, Part 2), I am going to talk about this phenomenon and share some thoughts on how we, as developers, can do a better job of transitioning from requirements to coding.

With any design and development effort (i.e., not just software), you begin with more abstract concepts and progress to more concrete, explicit details. Along this journey, you make discoveries and gain insights that were not accessible at the project’s early stages.

For me, uncovering hidden assumptions and details is one of the more rewarding aspects of engineering. Every time I identify some behavior or detail that was not adequately specified, I feel like I’m gaining more understanding of the system (and, consequently, fewer of these hidden behaviors will be left for someone else to discover).

This process of gaining increased understanding and insight is an inevitable consequence of building complex systems. The sooner we gain these insights, the better it will be for us and the ultimate users of the system. In my experience, these insights are gained throughout the various phases of product development:

- Prior to the construction phase

    - Defining the user stories/epics

    - Decomposing the system into components and services

- Upon commencement of construction

    - Implementing the features

    - Testing our own work

    - Demonstrating progress

    - User acceptance and quality assurance testing by others

    - Customer use of the system

It is impossible to completely understand a system prior to development (see my post Developing Software Products in a World of Gray), but we should strive to identify the majority of these insights earlier in our projects. It is much easier to change or enhance a story definition or a design plan prior to construction than it is to change the implementation of a design.

Unfortunately, the second scenario in my introduction (where insights are gained during construction) is an all-too-common occurrence in software development teams. In fact, I fear that most teams gain the majority of their insights during the construction phase. I am confident this is the cause of many schedule and cost issues with software projects, not to mention the technical debt that might also be added.

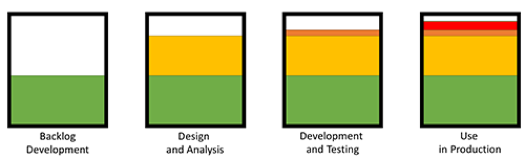

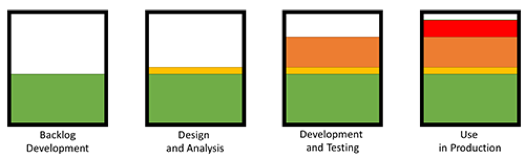

If we think about the collective understanding of a system, we might visualize it as a bucket or container. Once that container is completely full, it represents a thorough understanding of the details and requirements of a system. We add to the bucket as we gain insight and clarity through the various phases of development.

In a healthy development process, the vast majority of significant insights (i.e., insights that drive decisions that are hard to change later, such as architectural ones) are identified prior to the construction, or “manufacturing,” phase. Insights discovered before construction are less disruptive and do not carry the same risk and cost profile as insights identified later, during construction or after the product is in the hands of the end user.

Again, I should emphasize that there is really no technique that provides perfect insight prior to the construction of systems that have even a modest level of complexity. In my early experiences as a Systems Engineer at McDonnell Douglas in the late 80s, our requirements analysis process was full-on waterfall. We often spent months and had many meetings to attempt to fully understand the requirements and reduce risk on these projects. By and large, our process worked but it took a long, long time and – like other waterfall experiences – did not easily accommodate the insights that would be gained during the construction and testing phases.

Even though our team (rightly) abandoned waterfall, I feel that we as an industry have thrown the baby out with the bathwater when it comes to the role of critical thinking. The second scenario in my introduction highlights this overreaction to waterfall: jumping straight from user story to code. When development is done this way, the insight profile tends to look more like the picture below, where a significant amount of insight is gained late in the development process.

One of my challenges here at Don’t Panic Labs has been to effectively – and efficiently – introduce disciplined critical thinking into our development process prior to the construction phase. I wanted to achieve the benefits that I saw with waterfall, but in a lean/agile way. Coupled with this requirement was the need to create a process that was accessible to a variety of engineers with different levels of experience (including our interns).

In my experience, critical thinking occurs best in situations like the first scenario in my introduction. There is something that happens within our minds when we are forced to articulate (verbally or in writing) an abstract concept in explicit detail. My own experience with the waterfall approach shows that it is effective in promoting critical thinking. I wanted to capture the essence of that, but in a way that made sense for our lean/agile environment.

To facilitate critical thought in our projects, we came up with the concept of having our engineers write “white papers” based around this set of loose rules:

- Spend about one hour, but no more than two, to describe the implementation requirements of the particular story or feature the engineer is analyzing.

- Use whatever method of description makes sense to the engineer (e.g., drawings, words, or diagrams).

- Circulate this white paper amongst stakeholders to gather feedback and additional insights. These stakeholders would be product owners, development leads, project managers, QA folks, etc.

Because we were not interested in creating process for process’ sake, we also gave some thought to when it was a good use of time and effort to go through the “white paper” process:

- When the story presented a particularly challenging area of design and construction with some perceived complexity.

- When the development lead or engineer felt it warranted some deeper thought.

- When we had a less-experienced developer (or intern).

We began using this technique with some good results. Dev leads, project managers, and product owners appreciated the feedback loop prior to commencement of development. The only problem with this particular model was that there was – by design – no structure to what was required for the contents or output of this process. We simply wanted to give the developers time to think critically before jumping into code.

But as a result of this lack of structure, we often spent a fair amount of time explaining what was expected and what the engineer should be thinking about. So I went back to the drawing board.

I had an epiphany during the revision process: Why not develop a series of questions designed to get the engineer thinking about specific aspects of this feature? If the goal of this exercise was to answer questions about the feature, this structure seemed like a good way to prompt the engineer.

I also decided to explicitly ask for the specific, discrete development tasks that were envisioned to complete the feature. This task list was meant to be the final thing developed in the document. I also renamed the document to “Design Analysis Document” to be more descriptive of the activity.

The guidance we currently give is similar to the original, with only slight modifications:

- Spend about one hour, but no more than two, to describe the implementation requirements of the particular story or feature the engineer is analyzing.

- Use a provided template of questions (and associated guidance) as a framework for thinking through the problem, and add whatever method of description makes sense to the engineer (e.g., drawings, words, or diagrams).

    - Note: It is not required to answer every question.

- Include the specific sequence of implementation tasks (with estimates) for the story/feature.

- Circulate this design analysis document amongst stakeholders to get their feedback and additional insights.

    - These stakeholders would be product owners, development leads, project managers, QA folks, etc.
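For teams that like to keep these documents alongside their code, the guidance above could be sketched as a small data structure. This is only an illustration under my own assumptions, not our actual template: the class names, the field names, the sample question, and the sample story are all hypothetical. The two-hour limit, the optional answers, the task list with estimates, and the stakeholder review come from the guidance above.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """One discrete implementation task with an estimate (hypothetical shape)."""
    description: str
    estimate_hours: float


@dataclass
class DesignAnalysisDocument:
    """A hypothetical model of a design analysis document."""
    story: str
    # Template question -> answer. Not every question has to be answered,
    # so unanswered questions are simply absent.
    answers: dict[str, str] = field(default_factory=dict)
    # Ordered sequence of implementation tasks, each with an estimate.
    tasks: list[Task] = field(default_factory=list)
    # Stakeholders who will receive the document for feedback.
    reviewers: list[str] = field(default_factory=list)
    minutes_spent: int = 0

    def total_estimate_hours(self) -> float:
        """Sum the estimates of all listed tasks."""
        return sum(task.estimate_hours for task in self.tasks)

    def review_warnings(self) -> list[str]:
        """Return reminders based on the guidance; an empty list means none."""
        warnings = []
        if self.minutes_spent > 120:
            warnings.append("time box exceeded (more than two hours)")
        if not self.tasks:
            warnings.append("no implementation tasks listed")
        if not self.reviewers:
            warnings.append("no stakeholders listed for feedback")
        return warnings


# Example usage with made-up content.
doc = DesignAnalysisDocument(
    story="Export invoices as CSV",
    answers={"What inputs can be missing or malformed?": "Customer name may be blank."},
    tasks=[Task("Add export endpoint", 3.0), Task("Write CSV serializer", 2.0)],
    reviewers=["product owner", "development lead", "QA"],
    minutes_spent=75,
)
print(doc.total_estimate_hours())
print(doc.review_warnings())
```

The checks are deliberately reminders rather than hard failures, since the point of the exercise is to prompt thinking, not to enforce process for its own sake.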

We now find ourselves tweaking the questions in this template. Email me if you are interested in seeing this template.

Any system that provides value will change and evolve over time; that is natural. We know how to design systems to embrace change so that our architecture and design do not become unmaintainable.

This blog series has not been about the impact of requirements changing over time but about the changes that result from missed requirements that stem from hidden assumptions. These assumptions inevitably reveal themselves during development or production, and they can be extremely disruptive and costly to resolve.

If we call ourselves software engineers, we must think critically and leverage the types of structured processes engineers in other industries use to minimize the likelihood of missed requirements and hidden assumptions.

I hope this has provided some food for thought and some tools that you can use in your day-to-day work. As always, I’d love to hear your thoughts, reactions, and ideas for how you would improve upon what I have presented.